常见SEO网络摄像头云服务器Banana2联动可灵正确玩法!我现在做Ai视频只要一张图就够了

阿里云服务器帐号密码

Banana2 刷屏的 AI 视频玩法太香了!一张图就能生成 9 张连贯关键帧,搭配 Lovart 的 Agent 交互(提示语持续生效)、画布直选元素、无缝文字编辑,再加上可灵 O1 直接出视频的黑科技,批量创作电影分镜、创意视频零门槛。5 折福利加持下,到底为模型还是 Agent 付费?这波功能整合让 AI 创作爽感拉满!

最近推上又被一种Banana2的玩法刷屏了,给它一张照片,

我就能获得9张连贯的视频关键帧,

然后就可以用现在能同步生成音效和对话的可灵2.6做出了一段视频

这种做AI视频的模式完全可以做到大批量复制,所以我还做了下面这几个经典的电影画面分镜,

博尔塔拉云服务器服务

非!常!好!玩!

而且这个分镜的逻辑性很强,还挺实用的。

最近我刚好在做年终盘点付费的AI软件,有一个点想问问大家,像Lovart这类Agent,我是归类到我给里面的模型付费?还是给它的本体付费呢?

如果我是为了Agent交互付费的话是有使用技巧的,

比如刚刚那个玩法的是下面这样长的一大串提示语,如果普通生图的交互逻辑的话,需要我每生成一次就要重新复制一次提示语进去,真的很麻烦。。。。

PS. 超级长长长提示语预告

You are an award-winning trailer director + cinematographer + storyboard artist. Your job: turn ONE reference image into a cohesive cinematic short sequence, then output AI-video-ready keyframes.

User provides: one reference image (image).

1.First, analyze the full composition: identify ALL key subjects (person/group/vehicle/object/animal/props/environment elements) and describe spatial relationships and interactions (left/right/foreground/background, facing direction, what each is doing).2.Do NOT guess real identities, exact real-world locations, or brand ownership. Stick to visible facts. Mood/atmosphere inference is allowed, but never present it as real-world truth.3.Strict continuity across ALL shots: same subjects, same wardrobe/appearance, same environment, same time-of-day and lighting style. Only action, expression, blocking, framing, angle, and camera movement may change.4.Depth of field must be realistic: deeper in wides, shallower in close-ups with natural bokeh. Keep ONE consistent cinematic color grade across the entire sequence.5.Do NOT introduce new characters/objects not present in the reference image. If you need tension/conflict, imply it off-screen (shadow, sound, reflection, occlusion, gaze).

Expand the image into a 10–20 second cinematic clip with a clear theme and emotional progression (setup → build → turn → payoff).

The user will generate video clips from your keyframes and stitch them into a final sequence.

Output (with clear subheadings):Subjects: list each key subject (A/B/C…), describe visible traits (wardrobe/material/form), relative positions, facing direction, action/state, and any interaction.Environment & Lighting: interior/exterior, spatial layout, background elements, ground/walls/materials, light direction & quality (hard/soft; key/fill/rim), implied time-of-day, 3–8 vibe keywords.Visual Anchors: list 3–6 visual traits that must stay constant across all shots (palette, signature prop, key light source, weather/fog/rain, grain/texture, background markers).

From the image, propose:Theme: one sentence.Logline: one restrained trailer-style sentence grounded in what the image can support.Emotional Arc: 4 beats (setup/build/turn/payoff), one line each.

Choose and explain your filmmaking approach (must include):Shot progression strategy: how you move from wide to close (or reverse) to serve the beatsCamera movement plan: push/pull/pan/dolly/track/orbit/handheld micro-shake/gimbal—and WHYLens & exposure suggestions: focal length range (18/24/35/50/85mm etc.), DoF tendency (shallow/medium/deep), shutter feel (cinematic vs documentary)Light & color: contrast, key tones, material rendering priorities, optional grain (must match the reference style)

Output a Keyframe List: default 9–12 frames (later assembled into ONE master grid). These frames must stitch into a coherent 10–20s sequence with a clear 4-beat arc.

Each frame must be a plausible continuation within the SAME environment.

Use this exact format per frame:

[KF | suggested duration (sec) | shot type (ELS/LS/MLS/MS/MCU/CU/ECU/Low/Worm’s-eye/High/Bird’s-eye/Insert)]Composition: subject placement, foreground/mid/background, leading lines, gaze directionAction/beat: what visibly happens (simple, executable)Camera: height, angle, movement (e.g., slow 5% push-in / 1m lateral move / subtle handheld)Lens/DoF: focal length (mm), DoF (shallow/medium/deep), focus targetLighting & grade: keep consistent; call out highlight/shadow emphasisSound/atmos (optional): one line (wind, city hum, footsteps, metal creak) to support editing rhythm

Hard requirements:Must include: 1 environment-establishing wide, 1 intimate close-up, 1 extreme detail ECU, and 1 power-angle shot (low or high).Ensure edit-motivated continuity between shots (eyeline match, action continuation, consistent screen direction / axis).

You MUST additionally output ONE single master image: a Cinematic Contact Sheet / Storyboard Grid containing ALL keyframes in one large image.Default grid: 3×3. If more than 9 keyframes, use 4×3 or 5×3 so every keyframe fits into ONE image.

Requirements:6.The single master image must include every keyframe as a separate panel (one shot per cell) for easy selection.7.Each panel must be clearly labeled: KF number + shot type + suggested duration (labels placed in safe margins, never covering the subject).8.Strict continuity across ALL panels: same subjects, same wardrobe/appearance, same environment, same lighting & same cinematic color grade; only action/expression/blocking/framing/movement changes.9.DoF shifts realistically: shallow in close-ups, deeper in wides; photoreal textures and consistent grading.10.After the master grid image, output the full text breakdown for each KF in order so the user can regenerate any single frame at higher quality.

阿里云服务器增加硬盘

Output in this order:

A) Scene Breakdown

B) Theme & Story

C) Cinematic Approach

D) Keyframes (KF list)E) ONE Master Contact Sheet Image (All KFs in one grid)

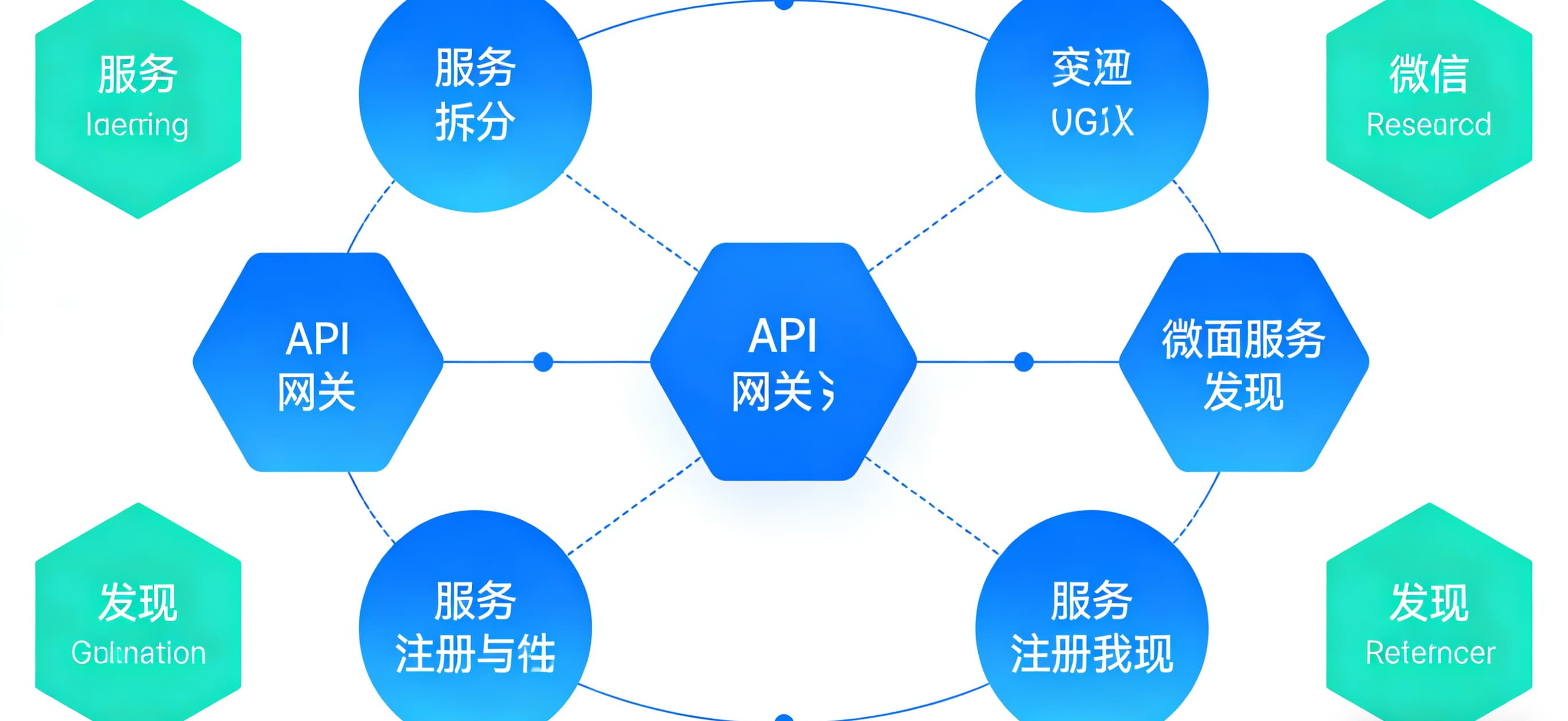

在Lovart中我可以直接在Agent把这个提示语丢进去,然后它会提示你给它一张参考图,之后给它一张想要作为电影序列的图,他就能分别生成9张连续的电影关键帧图,和一张拼好的九宫格,这样你的选择会更多,也不需要生成九宫格后一张张裁剪

当我接下来想继续生成另外一组图时,不用退出这个对话再重新上传提示语。只要在Lovart这个原本的对话里接着传图就可以了。

也就是说提示语可以持续生效。

相当于直接做成一个小型Agent了。

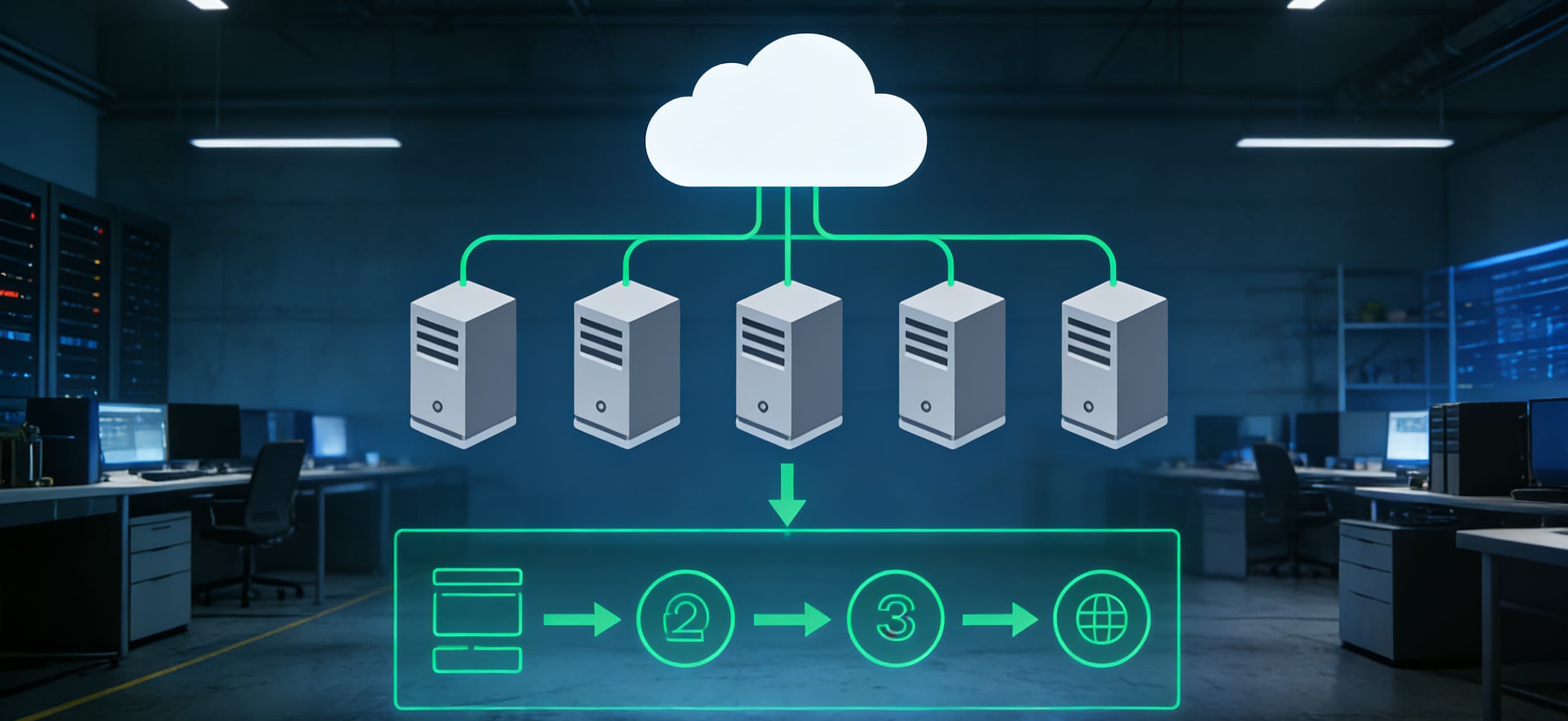

如果想要基于其中一张图做视频,在画布上添加一个视频生成器,然后可以直接从画布中选择图片,也不用下载再上传,也不用担心来回转发降低清晰度,也没水印啥的。

还有最近Lovart更新了两个编辑功能,一个是Touch edit,刚上线的时候我就介绍过,可以直接选中某张图中的某个元素,实现非常方便的图生图组合,Nano Banana Pro又出10种邪修玩法,写字海报已经落后N个版本了

图像编辑有了,视频编辑也能跟上,可灵O1,堪称视频版banana的多模态视频编辑模型,玩法我也总结过,AI视频的Banana时刻来了!我用可灵O1自由编辑任意画面,于是我和他们一拍即合,比如我可以选中不同图中的不同元素,

让可灵O1直接给我组成一个视频,人物动作自然,效果也超级稳定。

PS,是直接出视频,跳过了生成图片的步骤!!!

或者上传一个视频,然后选中图片中的不同元素组合成一个新的视频,这种选元素写提示语的方式就是又方便又直觉,不会让人有什么弯弯绕绕的感觉。

提示语拜拜,我今晚不回家吃饭了。

搭配可灵O1使用起来,用法超级简单,编辑视频简直无压力,另一个我觉得划算功能是文字编辑,

之前想在原图改个文字真蛮麻烦的,还要考虑是不是能保持原图的一致,这篇文章第三个PS了,我已经在做新年的动态版新年红包封面啦!

Lovart这个编辑文字功能,点进去之后是可以直接看到这个图片中所有的文字,然后直接改直接生成,

成品图的文字字体都能延续和原图同样的,也都保持在原本的位置,画面不会发生其他的改变,这功能就有点子绝了。

划重点,Lovart上版本的文字编辑还会丢样式,这一版已经全部修复了。

虽迟但到,这两天还有5折,至于他们有什么模型能一年0积分使用的,我真的数不过来了。所以,回到开头的问题,我是为了Lovart接入的模型付费?还是为了Lovart里的Agent付费呢?

我觉得都有,接入的速度,免积分,Agent+画布的交互性,能看到他们的诚意,不是直接接个模型就完事了,他们还会把各家好用的功能集成起来,有点像是张无忌,Agent就是九阳真经,画布就是乾坤大挪移,有这两招打底,再把可灵,Banana2,SeedDream4.5统统收入囊中,做AI视频让我做出了武侠小说的感觉也是没谁了,总的来说,这已经完全足够Lovart上榜我年底视频前十。

撰文:卡尔 & 阿汤 本文由人人都是产品经理作者【卡尔的AI沃茨】,微信公众号:【卡尔的AI沃茨】,原创/授权 发布于人人都是产品经理,未经许可,禁止转载。

题图来自Unsplash,基于 CC0 协议。

云服务器怎么挂机器人

推荐阅读

- 阿里云服务器端口是多少75号咖啡 2025-12-21 15:59:08

- 大智慧云服务器地址交易软件市场大洗牌来临?文华财经遭期货公司集体弃用,竞争对手加班加点占位 2025-12-21 15:49:04

- 域名指向阿里云服务器金融圈“女将”开直播:白天戴口罩看股票,晚上带妆当网红 2025-12-21 15:39:00

- 阿里云服务器25端口私募大佬开直播:白天戴口罩操盘,晚上化妆当网红!带货可以,荐股违规…… 2025-12-21 15:28:55

- 阿里云服务器客户端下载OpenV**Server—Client配置文件详解 2025-12-21 15:18:52